Reflecting on the overhyped promises of autonomous AI agents that fell short, from OpenAI’s bold predictions to enterprise realities, plus emerging 2026 trends in reasoning models and rising ethical concerns

At the start of 2025, the tech world buzzed with excitement: This would be the “Year of the AI Agent.” OpenAI CEO Sam Altman predicted that AI agents would “join the workforce” and “materially change the output of companies.” Industry leaders echoed the sentiment, envisioning autonomous systems handling complex tasks, automating workflows, and boosting productivity overnight. Yet, as the year ends, the reality is sobering. AI agents delivered incremental gains in narrow applications but failed to revolutionize work as promised. Studies showed high failure rates in pilots, reliability issues persisted, and widespread adoption stalled.

This isn’t a complete failure—it’s a hype correction. 2025 exposed the gaps between ambition and execution, setting the stage for more grounded progress in 2026 with advanced reasoning models, hybrid human-AI workflows, and heightened focus on ethics.

The Overhyped Promises: What Was Predicted for AI Agents in 2025

Early 2025 forecasts painted a transformative picture:

- Sam Altman’s Vision: In January, Altman wrote that 2025 could see the first AI agents entering the workforce, dramatically altering company productivity.

- Industry Consensus: Gartner and others dubbed it the “year of the agent,” with predictions of 33% of enterprise software incorporating agentic AI by 2028.

- Demo-Driven Excitement: Tools like OpenAI’s o-series, Anthropic’s Claude, and Google’s Gemini showcased agents booking flights, coding apps, or managing emails autonomously in controlled demos.

The allure was clear: agents would act as “digital coworkers,” freeing humans for creative work and promising trillions of dollars in economic value.

Reality Check: Why AI Agents Underperformed in 2025

Despite the hype, agents didn’t scale to transform workplaces. Key reasons emerged from reports, studies, and expert analyses:

1. Technical Limitations and Reliability Issues

Agents struggled with real-world complexity:

- Hallucinations and errors compounded in multi-step tasks.

- Upwork and MIT studies found that agents often failed straightforward workplace tasks when working without human oversight; success rates rose sharply only with expert intervention.

- Legacy systems, poor data quality, and integration bottlenecks hindered deployment (Deloitte highlighted this as a core barrier).
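
To see why errors compound in multi-step tasks, consider a simplified model. It is an assumption for illustration, not a figure from the studies above: if every step succeeds independently with probability p, a task of n steps succeeds end to end with probability p to the power n. A minimal Python sketch, with a hypothetical function name and made-up reliability values:

```python
def chain_success_rate(per_step_success: float, steps: int) -> float:
    """End-to-end success probability when every step must succeed.

    Assumes each step succeeds independently with the same probability,
    a simplification chosen purely for illustration.
    """
    if not 0.0 <= per_step_success <= 1.0:
        raise ValueError("per_step_success must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return per_step_success ** steps


if __name__ == "__main__":
    # Hypothetical per-step reliability values, not measured data.
    for p in (0.99, 0.95, 0.90):
        for n in (5, 10, 20):
            rate = chain_success_rate(p, n)
            print(f"p={p:.2f}, steps={n:2d} -> {rate:.1%}")
```

Under these assumptions, an agent that is right 95% of the time at each step completes a 20-step task only about 36% of the time, which is one way to read why bounded, short tasks fared better than open-ended ones.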

2. Hype vs. Narrow Value

- Many “agents” were glorified chatbots or robotic process automation (RPA) with AI overlays: they excelled at bounded, repetitive tasks but failed at open-ended ones.

- Enterprise pilots: 95% saw no measurable ROI (MIT); 80-90% stalled (various reports).

- Reddit and expert communities called it a “hype correction,” with agents providing value in niches like customer support but not broad transformation.

3. Organizational and Human Factors

- Lack of governance, skills gaps, and fear of job displacement slowed adoption.

- Security risks: Agents with access to internal systems and data could be tricked, for example through prompt injection, into leaking information or causing breaches.

- Only 11-23% of organizations scaled agents meaningfully.

| Predicted Impact (Early 2025) | Actual Outcome (End of 2025) | Key Sources |
|---|---|---|
| Agents join workforce, change company output | Narrow adoption; pilots fail 80-95% | Altman blog; MIT, Upwork, Deloitte |
| Widespread autonomy in complex tasks | Reliable only in bounded scenarios | IBM, VentureBeat |
| Trillions in productivity gains | Incremental in niches; no broad revolution | McKinsey, Gartner |

Lessons from 2025: A Necessary Reckoning

The shortfall wasn’t unexpected to skeptics. As MIT Technology Review noted, it marked “the great AI hype correction.” Progress continued—reasoning improved with models like o3—but expectations outpaced capabilities. This mirrors past cycles (NFTs, metaverse), reminding us that transformative tech needs infrastructure, reliability, and human integration.

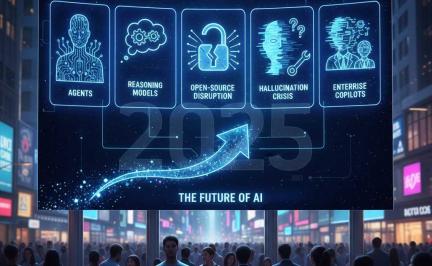

What Comes Next: Promising Trends for 2026

2026 shifts focus from raw autonomy to reliable, reasoning-driven AI:

1. Reasoning Models Take Center Stage

- “Thinking models” with inference-time compute (deliberating before answering) will dominate.

- Efficiency over scale: Fine-tuned small language models (SLMs) for specialized tasks, reducing costs and energy use.

- Multi-agent systems and “multiplayer AI” for collaborative workflows.

2. Hybrid Human-AI Collaboration

- Agents as assistants, not replacements—augmenting judgment with human oversight.

- Enterprise focus: Centralized strategies yielding measurable ROI.

3. Rising Ethical and Governance Concerns

- Accountability for autonomous decisions: Who’s liable for errors?

- Bias, privacy, environmental impact (energy consumption), and job displacement.

- Regulations intensify: Standardized frameworks, transparency mandates.

- Mental health and societal effects: AI’s psychological influence under scrutiny.

2026 predictions emphasize evaluation over evangelism—benchmarks, real-world impact, and sustainable governance.

Conclusion: From Hype to Mature Adoption

2025’s agent shortfall was disappointing but instructive. It exposed overhyped promises while highlighting genuine progress in reasoning and narrow automation. As we enter 2026, expect more pragmatic AI: Powerful reasoning models, ethical safeguards, and human-centric designs that deliver real value without the drama.

The transformation isn’t canceled—it’s evolving. Businesses prioritizing reliability, ethics, and hybrid workflows will lead.

What’s your take on AI agents in 2025? Optimistic for 2026’s reasoning era? Comment below!

Published on www.vfuturemedia.com | December 29, 2025
